Timely Portfolio

Thursday, March 23, 2017

Picking the Top

Wednesday, March 15, 2017

Puts as Protection

Many asset management firms are happily enjoying record revenue and profits driven not by inorganic growth or skillful portfolio management but by a seemingly endless increase in US equity prices. These firms are effectively commodity producers entirely dependent on the price of an index over which the firm has no control. The options market presents an easy, cheap, and liquid form of protection in the form of puts with which the firm could hedge its revenues, its clients, or both. However, many of these firms in blissful disregard for the brutality of asymmetric arithmetic choose to ignore this opportunity for protection.

Here is some very quick and ugly code with which anyone can evaluate various put options for the hedge and their potential outcomes. I hope someone, somewhere might benefit from the idea

Load Our Helpful Packages

library(quantmod)

library(purrr)

library(dplyr)

library(pipeR)

library(tibble)Use quantmod To Gather Data

getSymbols("SPY")## [1] "SPY"spy_opts <- getOptionChain("SPY", Exp = "2017-12-15")Construct Simple Hedged Portfolio

outcomes <- spy_opts$puts %>%

tbl_df() %>%

filter(Strike >= 230, Strike <= 260, Ask > 0) %>%

mutate(

spy_pos = floor(100*tail(SPY,1)[[4]]-100*Ask),

option_pos = ceiling(100*Ask)

) %>%

select(Strike, spy_pos, option_pos)

portfolio <- map(

seq_len(nrow(outcomes)),

~.x %>>%

{outcomes[.,]} %>>%

(strike~{

map(

seq(-50,50,5),

~tibble(

outcome = .x,

value = strike$spy_pos * (1+.x/100) +

max(c(strike$Strike - tail(SPY)[[4]] * (1+.x/100),0)) * 100

)

) %>>%

bind_rows()

})

)

strike_port <- tibble(

strike = outcomes$Strike,

outcomes = map(portfolio, ~.x)

)Plot Outcomes in $

plot(

value ~ outcome,

data = strike_port[1,]$outcomes[[1]],

ylim = c(0,40000),

type = "l"

)

walk(

seq_len(nrow(strike_port)),

~lines(value ~ outcome, data = strike_port[.x,]$outcomes[[1]])

)Make Outcomes Interactive in Plotly

library(plotly)

pltly <- reduce(

1:nrow(strike_port),

function(left, right) {

left %>%

add_lines(

x = strike_port[right,]$outcomes[[1]]$outcome,

y = strike_port[right,]$outcomes[[1]]$value/(100*tail(SPY,1)[[4]]) - 1,

inherit = FALSE,

name = strike_port[right,]$strike

)

},

.init = plot_ly()

)Tuesday, February 28, 2017

Shorting with Reckless Abandon

Tuesday, July 26, 2016

Ooms Magical Polyglot World

Jeroen Ooms (@opencpu) provides R

users a magical polyglot world of R, JavaScript, C, and C++. This is my

attempt to both thank him and highlight some of all that he has done.

Much of my new R depends on his work.

Ooms' Packages

metacran provides a list of all Jeroen's CRAN packages. Now, I wonder if any of his packages are in the Top Downloads.

jsonlite

Let's leverage the helpful meta again from

metacran and very quickly get some assistance

from hint-hint

jsonlite.

library(jsonlite)

library(formattable)

library(tibble)

library(magrittr)

fromJSON("http://cranlogs.r-pkg.org/top/last-month/9") %>%

{as_tibble(rank=rownames(.$downloads),.$downloads)} %>%

rownames_to_column(var = "rank") %>%

format_table(

formatters = list(

area(row=which(.$package=="jsonlite")) ~ formatter("span", style="background-color:#D4F; width:100%")

)

)

| rank | package | downloads |

|---|---|---|

| 1 | Rcpp | 236316 |

| 2 | plyr | 208609 |

| 3 | ggplot2 | 201959 |

| 4 | stringi | 188252 |

| 5 | jsonlite | 175853 |

| 6 | digest | 174714 |

| 7 | stringr | 173835 |

| 8 | magrittr | 166437 |

| 9 | scales | 156694 |

V8

V8 gives R its own embedded

JavaScript engine to leverage functionality in JavaScript that might not

exist in R. For example, the

WebCola constraint-based

layout engine offers valuable technology not available within R. Let's

partially recreate the smallgroups

example

all in R. You might notice that the previously mentioned jsonlite is

essential to this workflow.

library(V8)

library(jsonlite)

library(scales)

ctx = new_context(global="window")

ctx$source("https://cdn.rawgit.com/tgdwyer/WebCola/master/WebCola/cola.min.js")

## [1] "true"

### small grouped example

group_json <- fromJSON(

system.file(

"htmlwidgets/lib/WebCola/examples/graphdata/smallgrouped.json",

package = "colaR"

)

)

# need to get forEach polyfill

ctx$source(

"https://cdnjs.cloudflare.com/ajax/libs/es5-shim/4.1.10/es5-shim.min.js"

)

# code to recreate small group example

js_group <- '

// console.assert does not exists

console = {}

console.assert = function(){};

var width = 960,

height = 500

graph = {

"nodes":[

{"name":"a","width":60,"height":40},

{"name":"b","width":60,"height":40},

{"name":"c","width":60,"height":40},

{"name":"d","width":60,"height":40},

{"name":"e","width":60,"height":40},

{"name":"f","width":60,"height":40},

{"name":"g","width":60,"height":40}

],

"links":[

{"source":1,"target":2},

{"source":2,"target":3},

{"source":3,"target":4},

{"source":0,"target":1},

{"source":2,"target":0},

{"source":3,"target":5},

{"source":0,"target":5}

],

"groups":[

{"leaves":[0], "groups":[1]},

{"leaves":[1,2]},

{"leaves":[3,4]}

]

}

var g_cola = new cola.Layout()

.linkDistance(100)

.avoidOverlaps(true)

.handleDisconnected(false)

.size([width, height]);

g_cola

.nodes(graph.nodes)

.links(graph.links)

.groups(graph.groups)

.start()

'

# run the small group JS code in V8

ctx$eval(js_group)

## [1] "[object Object]"

Now, WebCola has done the hard work and laid out our nodes and links,

so let's get their positions.

nodes <- ctx$get('

graph.nodes.map(function(d){

return {name: d.name, x: d.x, y: d.y, height: d.height, width: d.width};

})

')

links <- ctx$get('

graph.links.map(function(d){

return {x1: d.source.x, y1: d.source.y, x2: d.target.x, y2: d.target.y}

})

')

Some great examples of packages employing V8 are

geojsonio,

lawn,

DiagrammeRsvg,

rmapshaper, and

daff.

rjade

We got layout coordinates above. Let's use another one of Jeroen's

packages rjade that provides

jade (now called

pug) templates through V8. rjade

will let us build a SVG graph with our layout.

library(rjade)

library(htmltools)

svg <- jade_compile(

'

doctype xml

svg(version="1.1",xmlns="http://www.w3.org/2000/svg",xmlns:xlink="http://www.w3.org/1999/xlink",width="960px",height="500px")

each l in lines

line(style={fill:none, stroke:"lightgray"})&attributes({"x1": l.x1, "x2": l.x2, "y1": l.y1, "y2": l.y2})

each val in rects

g

rect(style={fill: fillColor})&attributes({"x": val.x - val.width/2, "y": val.y - val.height/2, "height": val.height - 6, "width": val.width - 6, rx: 5, ry: 5})

text&attributes({"x": val.x, "y": val.y, "dy": ".2em", "text-anchor":"middle"})= val.name

'

,pretty=T

)(rects = nodes, lines = links, fillColor = "lightgray")

HTML(svg)

rsvg

If we are not in the browser though with inline SVG support, we very

likely will want a static image format such as png or jpeg. Of

course, Jeroen has that covered also with the crazy-speedy

rsvg. Jeroen offers

base64, but in this case we

will use base64enc, since it allows raw.

library(rsvg)

library(base64enc)

graph_png <- rsvg_png(charToRaw(svg))

tags$img(src=dataURI(graph_png), mime="image/png")

magick

Jeroen's newest package magick

is in my mind the coolest. magick gives us all the power of

ImageMagick as easy R

functions, and is pure wizardry. I am still shocked that it compiled

first try with absolutely no problems.

library(magick)

graph_img <- image_read(graph_png)

wizard_img <- image_read("http://www.imagemagick.org/image/wizard.png")

images <- image_annotate(

image_append(

c(

image_scale(image_crop(wizard_img, "600x600+100+100"), "100"),

image_crop(graph_img, "400x400+200+0")

)

),

"Ooms is a Wizard!",

size = 20,

color = "blue",

location = "+100+200"

)

tags$img(src=dataURI(image_write(images)), mime="image/png")

commonmark

I should note that this document was assembled in rmarkdown. RStudio

gives us lots of tools for working with rmarkdown, but Jeroen gives us

a powerful tool

commonmark. Let's use it

to give our readers other options for output.

library(commonmark)

rmarkdown::render("Readme.Rmd", "Readme.md", output_format="md_document")

tex <- markdown_latex(readLines("Readme.md"))

cat(tex, file="Readme.tex")

This would convert markdown to LaTeX. As a test, I used commonmark to make the html for this post.

Conclusion and Thanks

There are of course more packages, but I'll stop here. Jeroen Ooms truly

is a wizard, and the R community is extraordinarily blessed to have

him. Thanks so much Jeroen.

For even more wizardry, be sure to check out opencpu from Jeroen, which makes R available as a web service.

Friday, May 22, 2015

visNetwork, Currencies, and Minimum Spanning Trees

Just because I’m ignorant doesn’t mean I won’t try things. Feel free to correct any ignorance that follows. More than anything I would like to feature the new htmlwidget visNetwork. I thought the example from Minimum Spanning Trees in R applied to currency data (similar to this research paper Minimum Spanning Tree Application in the Currency Market) would be a good way to demonstrate this fancy new widget. We’ll grab the currency data from FRED using quantmod code from this old post Eigen-who?.

Code

# get MST using code from this post

# https://mktstk.wordpress.com/2015/01/03/minimum-spanning-trees-in-r/

library(quantmod)

# #get currency data from the FED FRED data series

Korea <- getSymbols("DEXKOUS",src="FRED",auto.assign=FALSE) #load Korea

Malaysia <- getSymbols("DEXMAUS",src="FRED",auto.assign=FALSE) #load Malaysia

Singapore <- getSymbols("DEXSIUS",src="FRED",auto.assign=FALSE) #load Singapore

Taiwan <- getSymbols("DEXTAUS",src="FRED",auto.assign=FALSE) #load Taiwan

China <- getSymbols("DEXCHUS",src="FRED",auto.assign=FALSE) #load China

Japan <- getSymbols("DEXJPUS",src="FRED",auto.assign=FALSE) #load Japan

Thailand <- getSymbols("DEXTHUS",src="FRED",auto.assign=FALSE) #load Thailand

Brazil <- getSymbols("DEXBZUS",src="FRED",auto.assign=FALSE) #load Brazil

Mexico <- getSymbols("DEXMXUS",src="FRED",auto.assign=FALSE) #load Mexico

India <- getSymbols("DEXINUS",src="FRED",auto.assign=FALSE) #load India

USDOther <- getSymbols("DTWEXO",src="FRED",auto.assign=FALSE) #load US Dollar Other Trading Partners

USDBroad <- getSymbols("DTWEXB",src="FRED",auto.assign=FALSE) #load US Dollar Broad

#combine all the currencies into one big currency xts

currencies<-merge(Korea, Malaysia, Singapore, Taiwan,

China, Japan, Thailand, Brazil, Mexico, India,

USDOther, USDBroad)

currencies<-na.omit(currencies)

colnames(currencies)<-c("Korea", "Malaysia", "Singapore", "Taiwan",

"China", "Japan", "Thailand", "Brazil", "Mexico", "India",

"USDOther", "USDBroad")

#get daily percent changes

currencies <- currencies/lag(currencies)-1

currencies[1,] <- 0

cor.distance <- cor(currencies)

corrplot::corrplot(cor.distance)

library(igraph)

g1 <- graph.adjacency(cor.distance, weighted = T, mode = "undirected", add.colnames = "label")

mst <- minimum.spanning.tree(g1)

plot(mst)

library(visNetwork)

mst_df <- get.data.frame( mst, what = "both" )

visNetwork(

data.frame(

id = 1:nrow(mst_df$vertices)

,label = mst_df$vertices

)

, mst_df$edges

) %>%

visOptions( highlightNearest = TRUE, navigation = T )

Wednesday, March 11, 2015

Extracting Heatmap

Inspired by this tweet, I wanted to try to do something similar in JavaScript.

Very cool hack: Extracting the original data from a heatmap image with R vector ops #rstats http://t.co/Lbi6FCXdrI pic.twitter.com/LCabkMGjXY

— Gregory Piatetsky (@kdnuggets) March 6, 2015 Fortunately, I had this old post Chart from R + Color from Javascript to serve as a reference, and I got lots of help from these links.

- http://stackoverflow.com/questions/6735470/get-pixel-color-from-canvas-on-mouseover

- http://bl.ocks.org/jinroh/4666920

- https://github.com/dtao/nearest-color

- https://github.com/Nycto/PicoModal

In a couple of hours, I got this crude but working rendering complete with a d3.js brush to get the scale. Then since this is sort of a finance blog, I imagined we found an old correlation heatmap like the one in Pretty Correlation Map of PIMCO Funds. Although, we could guess at the correlation values, I thought it would be a lot more fun to get live values. Try it out below.

- Brush over the scale / legend

- Input scale min and max

- Mouseover color areas in the chart

As I said, it is rough, but it works. It needs a little UI work :)

Thursday, March 5, 2015

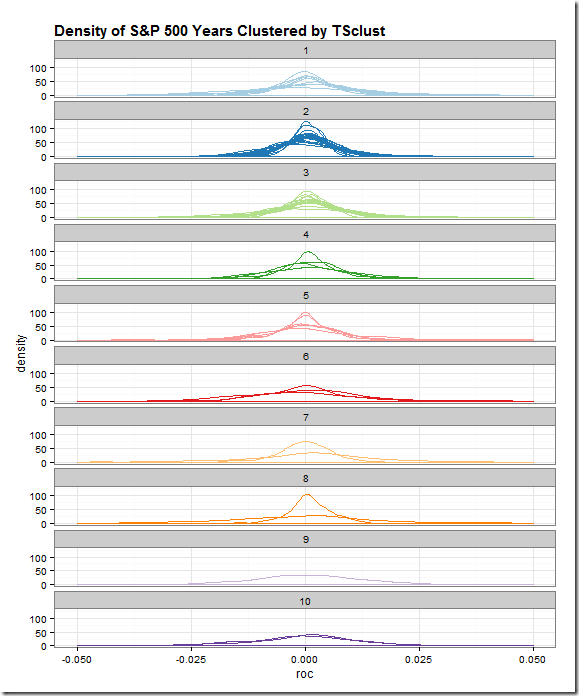

Is Time Series Clustering Meaningless? (lots of dplyr)

A kind reader directed me in a comment on Experiments in Time Series Clustering to this paper.

Clustering of Time Series Subsequences is Meaningless: Implications for Previous and Future Research

Eamonn Keogh and Jessica Lin

Computer Science & Engineering Department University of California – Riverside

As I said in my last post, I don’t know what I’m doing, so I have no basis for discussing or arguing time series clustering. After reading the paper a couple of times, I think I understand their points, and I do not think what I am doing is “meaningless”. In their financial time series examples, they use prices and speak of trying to find patterns. I simply want to classify which years are most alike by various characteristics, such as autocorrelation of returns not prices, distribution of returns, and all sorts of other classifiers.

More than anything this whole exercise gave me a good excuse to dig much, much deeper. Iongtime readers might be wondering where are the interactive plots. I wanted to share what I have done so far hoping that readers might elaborate, argue, or point me in good directions.

Regardless of your interest in time series clustering, you might enjoy the dplyr and piping that I used to generate the results. Also, I have not seen dplyr do applied to autocorrelation ACF, so you might want to check that out in the last snippet of code.

All of the code for this post and last post is in this Github repo.

library(TSclust)

library(quantmod)

library(dplyr)

library(pipeR)

library(tidyr)

sp5 <- getSymbols("^GSPC",auto.assign=F,from="1900-01-01")[,4]

sp5 %>>%

# dplyr doesn't like xts, so make a data.frame

(

data.frame(

date = index(.)

,price = .[,1,drop=T]

)

) %>>%

# add a column for Year

mutate( year = as.numeric(format(date,"%Y"))) %>>%

# group by our new Year column

group_by( year ) %>>%

# within each year, find what day in the year so we can join

mutate( pos = rank(date) ) %>>%

mutate( roc = price/lag(price,k=1) - 1 ) %>>%

# can remove date

select( -c(date,price) ) %>>%

as.data.frame %>>%

# years as columns as pos as row

spread( year, roc ) %>>%

# remove last year since assume not complete

( .[,-ncol(.)] ) %>>%

# remove pos since index will be same

select( -pos ) %>>%

# fill nas with previous value

na.fill( 0 ) %>>%

t %>>%

(~sp_wide) %>>%

# use TSclust diss; notes lots of METHOD options

diss( METHOD="ACF" ) %>>%

hclust %>>%

(~hc) %>>%

ape::as.phylo() %>>%

treewidget #%>>%

#htmlwidgets::as.iframe(file="index.html",selfcontained=F,libdir = "./lib")

library(lattice)

library(ggplot2)

# get wide to long the hard way

# could have easily changed to above pipe to save long

# as an intermediate step

# but this makes for a fun lapply

# and also we can add in our cluster here

sp_wide %>>%

(

lapply(

rownames(.)

,function(yr){

data.frame(

year = as.Date(paste0(yr,"-01-01"),"%Y-%m-%d")

,cluster = cutree(hc,10)[yr]

,pos = 1:length(.[yr,])

,roc = .[yr,]

)

}

)

) %>>%

(do.call(rbind,.)) %>>%

(~sp_long)

sp_long %>>%

ggplot( aes( x = roc, group = year, color = factor(cluster) ) ) %>>%

+ geom_density() %>>%

+ facet_wrap( ~ cluster, ncol = 1 ) %>>%

+ xlim(-0.05,0.05) %>>%

+ labs(title='Density of S&P 500 Years Clustered by TSclust') %>>%

+ theme_bw() %>>%

# thanks to my friend Zev Ross for his cheatsheet

+ theme( plot.title = element_text(size=15, face="bold", hjust=0) ) %>>%

+ theme( legend.position="none" ) %>>%

+ scale_color_brewer( palette="Paired" )

# explore autocorrelations

sp5 %>>%

# dplyr doesn't like xts, so make a data.frame

(

data.frame(

date = index(.)

,price = .[,1,drop=T]

)

) %>>%

# add a column for Year

mutate( year = as.numeric(format(date,"%Y"))) %>>%

# group by our new Year column

group_by( year ) %>>%

# within each year, find what day in the year so we can join

mutate( pos = rank(date) ) %>>%

mutate( roc = price/lag(price,k=1) - 1 ) %>>%

# can remove date

select( -c(date,price) ) %>>%

as.data.frame %>>%

# years as columns as pos as row

spread( year, roc ) %>>%

# remove last year since assume not complete

( .[,-ncol(.)] ) %>>% t -> sP

sp_long %>>%

group_by( cluster, year ) %>>%

do(

. %>>%

(

clustd ~

acf(clustd$roc,plot=F) %>>%

(a ~

data.frame(

cluster = clustd[1,2]

,year = clustd[1,1]

,lag = a$lag[-1]

,acf = a$acf[-1]

)

)

)

) %>>%

as.data.frame %>>%

ggplot( aes( x = factor(cluster), y = acf, color = factor(cluster) ) ) %>>%

+ geom_point() %>>%

+ facet_wrap( ~lag, ncol = 4 ) %>>%

+ labs(title='ACF of S&P 500 Years Clustered by TSclust') %>>%

+ theme_bw() %>>%

# thanks to my friend Zev Ross for his cheatsheet

+ theme(

plot.title = element_text(size=15, face="bold", hjust=0)

,legend.title=element_blank()

) %>>%

+ theme(legend.position="none") %>>%

+ scale_color_brewer(palette="Paired")

If you’ve made it this far, I would love to hear from you.